Welcome to the Prezi Gallery

Discover top Prezi presentations and get inspired by our editors' curated picks. Find example presentations across a wide range of topics, industries, and recent events.

#diversidadeeinclusão

Popular categories

Watch the best videos from the most popular categories

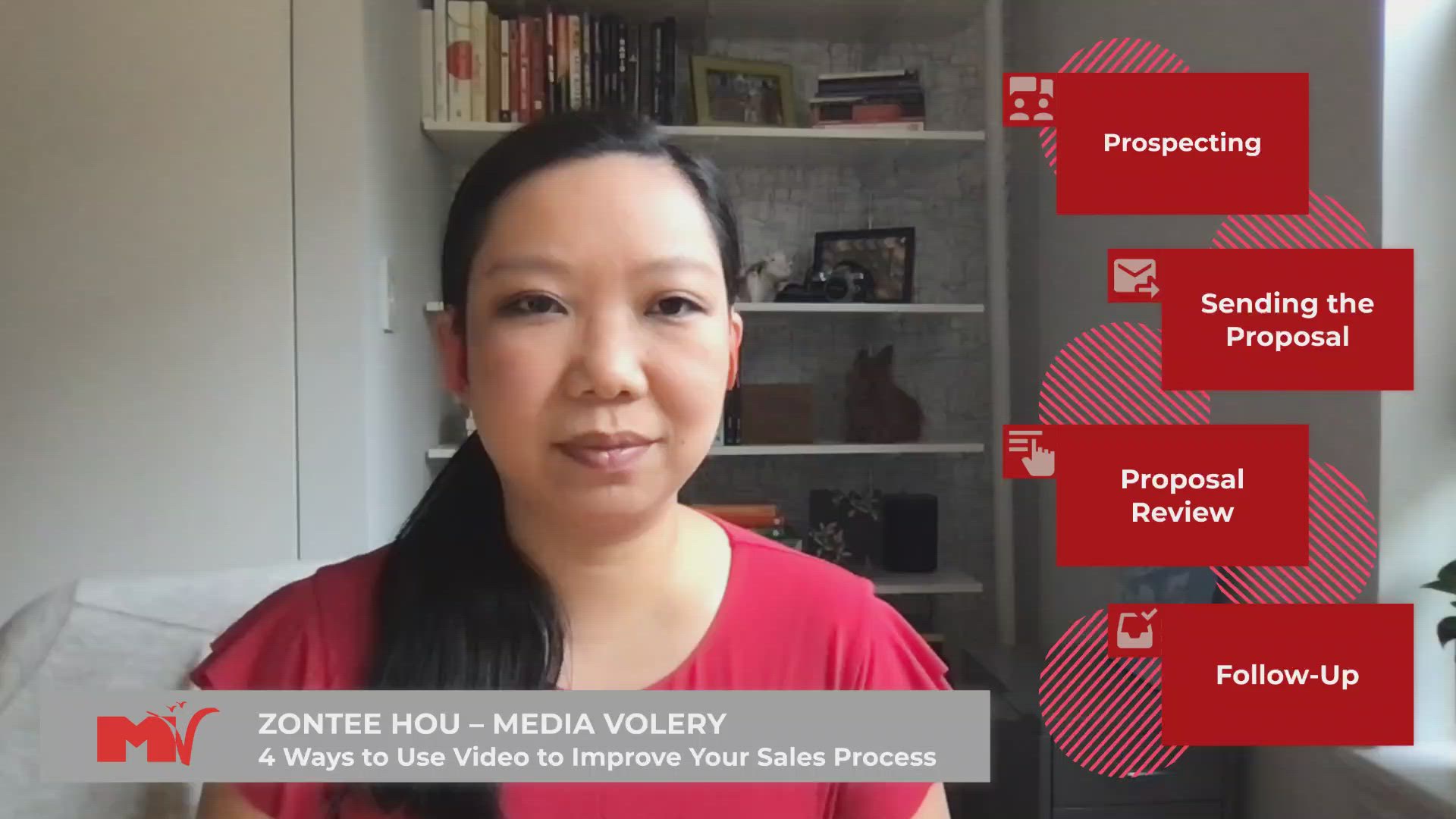

#VendasVirtuais

See how these sales experts can help you take your virtual sales to the next level.

See all videos →

#AprendizadoMisto

See how educators are using video creatively to support online teaching and learning.

See all videos →

#TrabalhoHíbrido

Learn best practices and get expert tips on everything about remote work.

See all videos →